Reducing idle power consumption for Nvidia P100 and P40 GPUs

One overlooked aspect of GPU usage is the power they consume when idle. Idle power draw refers to the amount of electricity a GPU consumes when it's not performing intensive tasks. This can significantly impact both energy consumption and electricity costs over time.

Without any tricks, a P40 with VRAM loaded can burn 45W at idle. With some tweaks, this idle power can be reduced to around 10W.

Idle Power Draw: 10W vs 45W

Let's consider the impact of the difference between a 45W and 10W idle draw. While the difference might seem small at first glance, the cumulative effect over a year can be substantial.

Annual Energy Consumption

To calculate the annual energy consumption, we use the formula: Energy = Power × Time / 1000

Assuming the GPUs are idle 24 hours a day for 365 days a year, we get:

- 10W GPU: 10W × 24 × 365 / 1000 = 88 kWh

- 45W GPU: 45W × 24 × 365 / 1000 = 394 kWh

Annual Cost of Electricity

The cost of electricity can vary substantially from place to place, but where I live it is approximately $0.25 per kWh. Which then gives the annual costs as follows:

| GPU Idle Power Draw (W) | Annual Energy Consumption (kWh) | Annual Cost ($) |

|---|---|---|

| 10 | 88 | $22.00 |

| 45 | 394 | $99.00 |

Table 1: Annual cost comparison of P40 idling at 10W vs 45W

The difference in idle power draw between 10W and 45W might seem minor on a per-second basis, but over the span of a year, it results in significant energy consumption and cost differences, especially when you put multiple GPUs in a system.

P40 idle state quirks

The P40 has only P0 and P8 states and idle draw can be as low as 10W when VRAM is empty, but the P40 seems to have a quirk when content is loaded into VRAM: the power draw can be 45W even when the GPU is performing no work.

Luckily, there are ways to work around this and reduce idle power draw by directly adjusting pstates.

Reducing idle power draw by directly adjusting pstates

A library and CLI utilities to manage pstates here:

and daemon:

Patches to automatically drop pstates while idle for llama.cpp and vLLM are available here:

There's also a separate project that aims to do something similar called gppm, which aims to handle multiple cards and llama.cpp instances independently.

P100 has no pstates

The P100 is a datacentre GPU that was originally designed for training workloads. Since the target workload aimed at continuous maximum utilization, these GPUs have no low power pstates.

Even at idle with no data loaded into VRAM, these can consume just under 30W of idle power. Put four of them in a server and you have 120W of idle power just for the GPUs.

| GPU Idle Power Draw (W) | Annual Energy Consumption (kWh) | Annual Cost ($) |

|---|---|---|

| 120 | 1,051 | $263.00 |

Table 2: Annual cost of running 4xP100s at idle power

Given this power profile, you would choose P100s if:

- You expect to have high utilization with little idle time

- You want to run computations in batches and will turn off the server when batches are done

- You want the server to double as a space heater or have money to burn

Since the P100 is not very popular for home use due to this idle power issue and having only 16GB of VRAM compared to the P40's 24GB, the prices of P100s on the 2nd hand market have remained relatively low even as the P40 prices have skyrocketed.

But what if...

One last possibility of power saving is to mount the GPU onto a riser to enable disconnection of power to the GPU and then performing PCIe hot-unplugging to save power. This could theoretically save power at the expense of start-up latency.

Getting PCIe hot-plugging to work on consumer grade hardware maybe challenging and frustrating (massive understatement alert).

What about operating power?

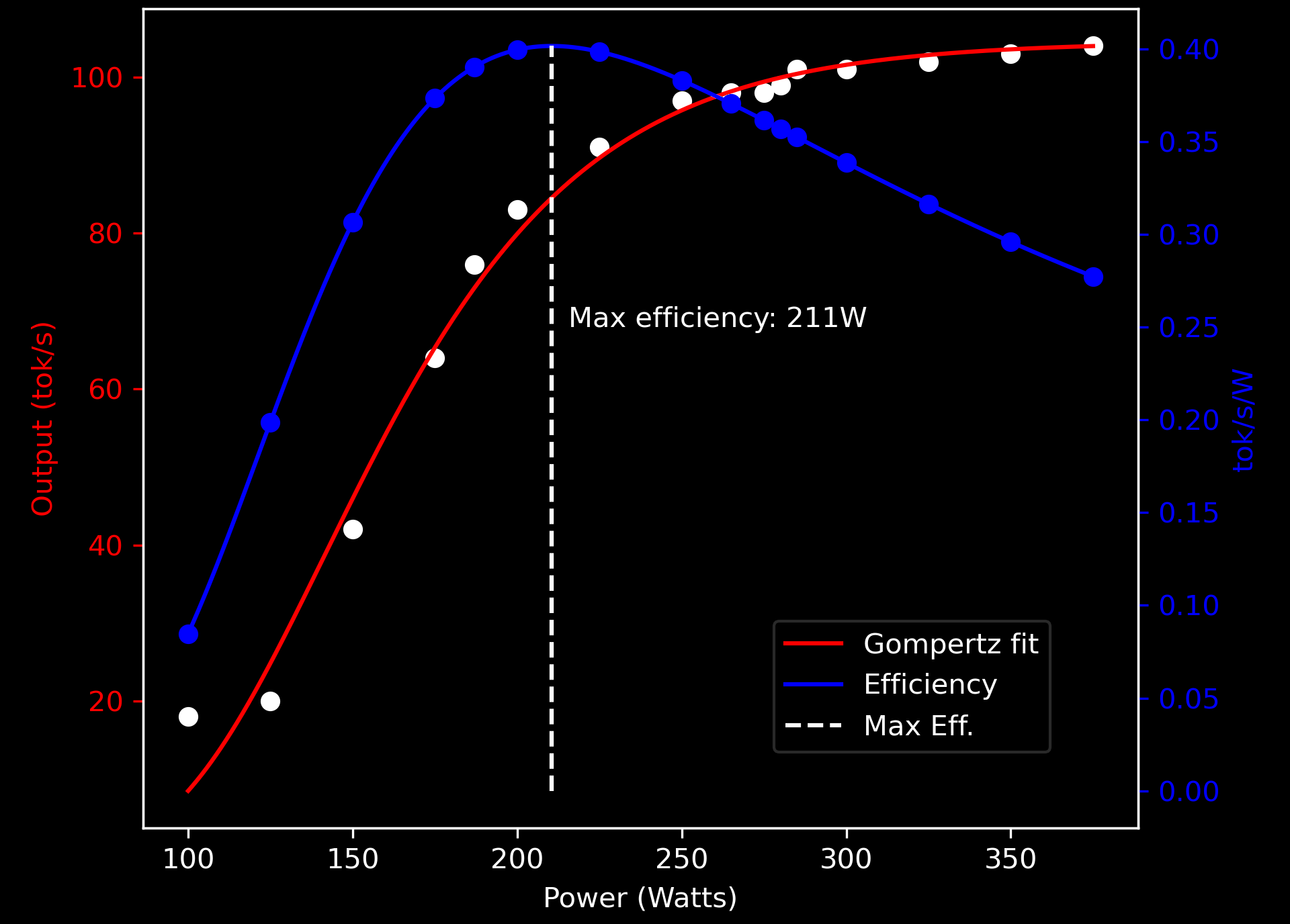

Idle power is only only one aspect, see this article on how to manage active power to maximize efficiency:

Cooling GPUs

One final challenge with re-purposing these datacenter GPUs for home use is that the cards do not have active cooling, instead relying on forced air cooling from the server.

Cooling these cards also has certain factors to be considered and is not straight forward - or at least not if you don't want hairdryer levels of screaming fans in the server. Subscribe using the link below to get our guide on the options for cooling these GPUs while retaining your sanity!

Readers' comments

Thanks for the inspiration.

I just updated someone else's repo (PR pending approval) to give .net control of the same API that nvidia_pstate is using because unfortunately the python script didn't enumerate my Tesla GPUs.

Here's my fork of the .net wrapper: https://github.com/maz-net-au/NvAPIWrapper

You can control it like this: (8 is for P8, use 16 to restore the default, auto-switching mode)

PhysicalGPUHandle[] handles = GPUApi.EnumTCCPhysicalGPUs(); foreach (PhysicalGPUHandle ph in handles) { GPUApi.SetForcePstate(ph, 8, 2); // the 2 is from nvidia_pstate python script }I'm keeping the units at P8 and watching for GPU utilization, allowing P0 for 2 mins after the last poll detected utilisation above 10%. I.e. as soon as you start inference, I allow the cards to switch to P0 and if unused for a couple of minutes, it forces them back to P8.

My frankenstien's monster of a Dell R720XD has 2x Tesla P40's and 2x Tesla T4's in it and if I leave llama.cpp and ComfyUI both running, just the idle P0 power usage heats up the compute units and runs the chassis fans at 80%. This is all a convaluted fix for the issue of not wanting to piss off my wife with the soothing hum of server fans.